Random Forest Assignment Help (Ace Your Grades with Random Forest Homework Help)

Why Choose The Statistics Assignment Help?

- On Time Delivery
- Plagiarism Free Service
- 24/7 Support
- Affordable Pricing
- PhD Holder Experts
- 100% Confidentiality

Professional assignment writing service with on-time delivery. The Statistics Assignment Help expert did extensive research on the subject, included images and diagrams, and provided enough examples to support the content. Thank you.

A friend had recommended this portal to me when I struggled with my Random Forest assignment. The price structure was affordable, so I thought I'd try it out.

My gratitude goes out to The Statistics Assignment Help for helping me complete my Random Forest assignment and submit it on time; I wouldn't have managed it otherwise. The solution followed my prescribed citation style and contained all the information I needed.

Random Forest Assignment Help | Homework Help

Do you have to write random forest assignments as part of your machine learning course? Don't have much time to research the topic your professor assigned? Then seek the help of our experts without a second thought. They are available around the clock, understand the requirements set by professors, and solve Random Forest assignments accordingly. The solutions prepared by our experts will help you secure A+ grades in your assignments and exams. You no longer have to worry about these tasks; instead, you can invest the time you would have spent on assignments in preparing for exams. We have the best Machine Learning and Data Science experts to offer quality Random Forest assignment help.

What is Random Forest?

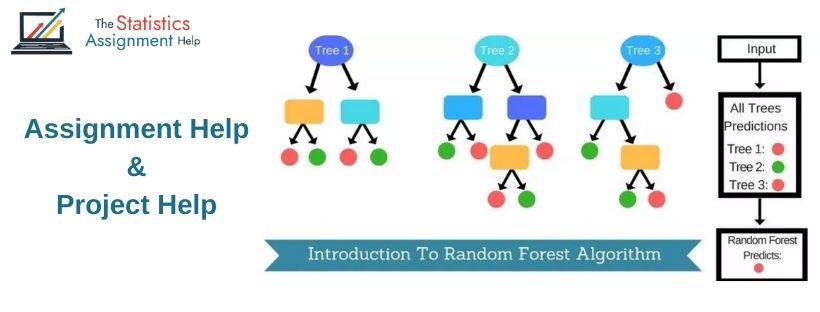

Random forest is a flexible, easy-to-use machine learning algorithm that tends to produce accurate results. It is widely used because of its simplicity and versatility. As the name suggests, it builds many decision trees and combines their outputs: for classification, the final prediction is the class chosen by the majority of the trees; for regression, it is the average of the trees' predictions. Like other supervised machine learning techniques, a random forest learns from training data to predict future outcomes. It uses bagging, so each tree learns from its own random bootstrap sample of the training data. A forest typically contains tens to hundreds of trees (scikit-learn's default is 100), and sometimes thousands.
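The way a forest combines its trees' outputs can be sketched in a few lines: a majority vote for classification and the mean of the trees' predictions for regression. This is a minimal illustration, not any library's API; `majority_vote` and `average_prediction` are hypothetical helper names, and the per-tree predictions are made-up inputs. Implementations differ in the details: scikit-learn's classifier, for example, averages the trees' predicted class probabilities rather than counting hard votes.

```python
from collections import Counter
from statistics import mean


def majority_vote(tree_predictions):
    """Classification: return the label predicted by the most trees."""
    # most_common(1) returns [(label, count)] for the top label;
    # ties go to the label encountered first.
    return Counter(tree_predictions).most_common(1)[0][0]


def average_prediction(tree_predictions):
    """Regression: return the mean of the trees' numeric predictions."""
    return mean(tree_predictions)


# Hypothetical outputs of individual trees for a single input
print(majority_vote(["spam", "ham", "spam", "spam", "ham"]))  # spam
print(average_prediction([2.0, 3.0, 4.0]))  # 3.0
```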

Why Use Random Forest?

The reasons to use random forest include:

- The random forest algorithm can be used for both regression and classification tasks.

- It often achieves high accuracy, and its out-of-bag (OOB) samples give a built-in accuracy estimate that works much like cross-validation.

- It has effective methods for estimating missing values and can maintain accuracy when a large proportion of the data is missing (support for missing values depends on the implementation).

- With enough trees, averaging many decorrelated trees reduces variance, so the forest is much less prone to overfitting than a single deep decision tree.

- It can handle large datasets with high dimensionality.
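Bagging trains each tree on a bootstrap sample: rows drawn from the training set with replacement, so some rows repeat and others are left out. These left-out, "out-of-bag" rows are what a random forest can use to estimate its accuracy without a separate validation set. The sketch below uses hypothetical helper names and shows that roughly 37% of rows end up out-of-bag for a single tree (the expected fraction is (1 - 1/n)^n, which approaches 1/e for large n).

```python
import random


def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices with replacement (one bootstrap sample)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def out_of_bag(n_rows, sample):
    """Rows never drawn into the bootstrap sample; usable for validation."""
    drawn = set(sample)
    return [i for i in range(n_rows) if i not in drawn]


rng = random.Random(0)  # fixed seed for reproducibility
n = 10_000
sample = bootstrap_indices(n, rng)
oob = out_of_bag(n, sample)
print(f"out-of-bag fraction: {len(oob) / n:.3f}")  # typically about 0.37
```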

How does the Random Forest work?

A random forest is a supervised learning algorithm built from a large number of decision trees, each trained with the bagging method. Bagging combines several learning models so that the combined result is more accurate than any single model. The random forest builds multiple decision trees and merges their outputs to produce a more accurate and stable prediction. A key advantage is that the same algorithm handles both classification and regression problems.
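A minimal sketch of bagging, assuming a toy one-dimensional dataset and using decision stumps (one-split trees) in place of full decision trees. The helpers `fit_stump`, `fit_bagged_stumps`, and `predict` are hypothetical names for illustration, and `n_estimators` only mirrors the scikit-learn parameter name. This shows bagging alone; a real random forest also grows deeper trees and picks a random subset of features at each split.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a one-split tree on 1-D data: choose the threshold whose two
    leaves (each predicting its majority label) misclassify the fewest points."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        # The largest threshold leaves the right side empty; reuse the left label.
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return lambda x: left_label if x <= t else right_label


def fit_bagged_stumps(xs, ys, n_estimators, seed=0):
    """Bagging: train each stump on its own bootstrap sample of the rows."""
    rng = random.Random(seed)
    n = len(xs)
    stumps = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return stumps


def predict(stumps, x):
    """Combine the stumps by majority vote."""
    return Counter(stump(x) for stump in stumps).most_common(1)[0][0]


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = ["low"] * 4 + ["high"] * 4
forest = fit_bagged_stumps(xs, ys, n_estimators=25)
print(predict(forest, 0.5), predict(forest, 8.5))
```

Because each stump sees a different bootstrap sample, the stumps pick slightly different thresholds, and the vote smooths out their individual quirks.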

A random forest has nearly the same hyperparameters as a decision tree or a bagging classifier. You don't need to combine a decision tree with a bagging classifier yourself; you can use a random forest classifier class directly (in scikit-learn, `RandomForestClassifier`), and for regression tasks you can use the corresponding regressor (`RandomForestRegressor`). The random forest adds extra randomness while growing the trees: instead of searching for the best feature among all features when splitting a node, the algorithm searches for the best feature among a random subset of features. You can make the trees even more random by also using random thresholds for each feature instead of searching for the best possible threshold, as extremely randomized trees do.
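The random feature selection at each split can be sketched as follows, assuming Gini impurity as the split criterion (a common choice for classification trees and scikit-learn's default). The function names are illustrative, and the `max_features` argument only mirrors the scikit-learn hyperparameter's name. The sketch searches the split for a single node, not a whole tree.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, labels, max_features, rng):
    """Find the lowest-impurity split of one node, searching only a random
    subset of `max_features` feature indices (not all features).

    Returns (feature_index, threshold, weighted_gini), or None if no
    candidate feature can split the rows into two non-empty groups.
    """
    n_features = len(rows[0])
    candidates = rng.sample(range(n_features), max_features)
    best = None
    for f in candidates:
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            if not left or not right:
                continue  # a useful split must send rows to both children
            # Weight each child's impurity by its share of the rows.
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[2]:
                best = (f, threshold, score)
    return best


rows = [[1, 5], [2, 5], [3, 1], [4, 1]]
labels = ["a", "a", "b", "b"]
print(best_split(rows, labels, max_features=1, rng=random.Random(0)))
```

With `max_features=1`, the node can only use whichever single feature was drawn; in this toy data either feature separates the two classes perfectly, so the weighted impurity is 0 either way.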

The working of a random forest is best explained through an example. Suppose James wants to go on vacation every year, and he asks friends and acquaintances for suggestions. The first friend he approaches asks about his likes and dislikes from past trips and, based on James's answers, offers some travel advice. This friend is using a typical decision tree approach: a set of rules applied to James's answers to reach a recommendation. James then consults many other friends, each of whom asks him different questions and makes their own recommendation. Finally, James chooses the destination recommended by most of his friends. Each friend plays the role of one decision tree, and James's final choice is the forest's majority vote.

Here is another example. Suppose a random forest must decide whether an image shows a thumb, a finger, or a human. The forest contains many randomized decision trees, and because each tree is trained on a different random sample of the data and looks at a random subset of features (for example, features describing the hand or the fingers), different trees can predict different outcomes for the same input. Each tree casts one vote, and the votes for "finger", "thumb", and "human" are counted. If "finger" receives the most votes, the forest returns "finger" as the predicted target.
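Counting the trees' votes also yields the share of trees behind each class, which can be read as a rough confidence score. A minimal sketch, with a hypothetical `vote_shares` helper and made-up votes from ten trees:

```python
from collections import Counter


def vote_shares(tree_votes):
    """Return the winning class and the fraction of trees voting for each class."""
    counts = Counter(tree_votes)
    total = len(tree_votes)
    shares = {label: count / total for label, count in counts.items()}
    winner = max(shares, key=shares.get)  # class with the largest share
    return winner, shares


# Hypothetical votes from ten trees for a single image
votes = ["finger"] * 6 + ["thumb"] * 3 + ["human"]
winner, shares = vote_shares(votes)
print(winner)  # finger
print(shares)  # {'finger': 0.6, 'thumb': 0.3, 'human': 0.1}
```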

Understand all the intricacies of model building and applications by choosing the best random forest assignment help from The Statistics Assignment Help.

Uses of Random Forest Analysis

Students pursuing machine learning courses must be thorough with this topic. Here are a few applications of the random forest algorithm:

- Banking sector - A bank has many customers; most are loyal, while a few may be fraudsters. A random forest can help determine whether a customer is genuine by learning from past customer data, and it is also used to identify fraudulent transactions from patterns in transaction histories.

- Medicine - Medicines are prepared from different ingredients, some natural and some chemical. Random forest analysis can help identify the right combination of components for a medicine and estimate a drug's sensitivity. It can also help identify a patient's disease by analyzing past medical records.

- Stock market - Machine learning plays an important role in stock market analysis. A random forest can be trained on historical market data to model stock behavior, for example to estimate whether buying a specific stock is likely to result in a profit or a loss.

Don't have enough time to write your random forest assignment? Submit it to us. Free yourself from the stress of solving assignments with our instant, high-quality Random Forest assignment help.

Why Hire Our Random Forest Assignment Help?

We are emerging as the best Random Forest assignment help provider in the market, offering superior quality solutions to students globally. Students choose our statistics assignment help services because:

- Assured on-time delivery: We have a high success rate of delivering assignments before the deadline. We submit them early so that students can review them and come back to us for rework if needed.

- 24/7 help: We offer round-the-clock support to students in handling their queries.

- Subject Matter Experts: Our team of machine learning experts and statisticians does the assignment for you. Our interview process is rigorous, and we hire only experts who can deliver quality solutions that improve students' grades.

- Limitless free revisions: We are known for delivering quality solutions to students. However, if you are not happy with the final draft, you can come to us anytime for revisions. We revise the content as many times as you want, until you are happy with the output, at no extra charge.

- 100% plagiarism-free: You will find no trace of plagiarism in the assignments we deliver. We check the content multiple times before emailing it to you.

If you want to get your assignment done by skillful experts, you can call us without waiting any longer.

Frequently Asked Questions

A random forest is a supervised learning algorithm. It builds a "forest" from an ensemble of decision trees, which are usually trained using the "bagging" method. The basic premise of bagging is that combining several learning models improves the overall result.

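In a random forest, hyperparameters are used either to improve the model's predictive ability or to make it faster. Here are some of the hyperparameters of scikit-learn's random forest implementation, grouped by purpose:

- Improving the model's predictive ability: `n_estimators` (the number of trees), `max_features` (the number of features considered when splitting a node), and `min_samples_leaf` (the minimum number of samples required in a leaf node).

- Improving the model's speed: `n_jobs` (the number of processor cores used in parallel; `-1` uses all of them).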
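Why does adding trees (a larger `n_estimators`) tend to help? The sketch below is an idealized calculation, not scikit-learn code: it assumes a binary task in which every tree is correct with the same probability and errs independently of the others, and it computes the chance that a majority vote is correct. Real trees are trained on overlapping data and are correlated, so the actual gains are smaller; reducing that correlation is the purpose of randomization such as the random feature subsets (`max_features` in scikit-learn). The name `majority_accuracy` is hypothetical.

```python
from math import comb


def majority_accuracy(n_trees, p_correct):
    """Probability that a strict majority of n_trees independent voters is
    correct, when each is correct with probability p_correct (binary task)."""
    if n_trees % 2 == 0:
        raise ValueError("use an odd number of trees to avoid ties")
    need = n_trees // 2 + 1  # smallest strict majority
    # Sum the binomial probabilities of at least `need` correct trees.
    return sum(
        comb(n_trees, k) * p_correct**k * (1 - p_correct) ** (n_trees - k)
        for k in range(need, n_trees + 1)
    )


for n in (1, 11, 101):
    print(n, round(majority_accuracy(n, 0.6), 3))
```

Under these assumptions the majority is right more often as trees are added, but only when each tree beats chance (`p_correct > 0.5`); with `p_correct` below 0.5, adding trees makes the vote worse.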

A "Random Forest Classifier" is a classification technique made up of many decision trees. It uses randomness (bagging and random feature selection) when building each individual tree to create an uncorrelated forest of trees, whose combined prediction is more accurate than that of any individual tree.

The following are some of the benefits of using random forest:

- Both regression and classification tasks can be performed using the random forest technique.

- It often achieves a high level of accuracy, and its out-of-bag samples provide a built-in accuracy estimate similar to cross-validation.

- It can estimate missing values and maintain accuracy when a large part of the data is missing (depending on the implementation).

- With many trees, averaging reduces variance, so the forest is much less likely to overfit than a single decision tree.

- It is capable of handling large amounts of data with high dimensionality.

Random forest is a machine learning technique that tends to produce reliable results and is both flexible and simple to use. Because of its simplicity and versatility, it is one of the most widely used algorithms. It builds many decision trees, and the final prediction is the class chosen by the majority of the trees (or, for regression, the average of their predictions). Like other supervised machine learning algorithms, random forests use training data to predict future outcomes. They use bagging, so each tree learns from a random bootstrap sample of the training data. A forest commonly contains hundreds of trees.